From now on, if you want to write something you expect to stay private, it’s a good idea to use pen and paper or something other than your computer. What you say in online meetings can now be transcribed, stored, and retrieved. Even more concerning, anything you type into a document draft you save, including angry drafts, can be accessed by AI systems, potentially disclosing what you believed was private. The same goes for email messages, sent and received. Deleting files, messages, and meeting information, and preventing unauthorized copies, are more crucial than ever.

Some executives at my keynote presentations say, “I wish AI would give me answers based on what is happening in our company. I would get so much better results than the generic answers I get now!”

Their wish has been granted. Retrieval-augmented generation (RAG) lets AI retrieve your organization’s own information, including what is currently happening there, to produce more relevant responses. The process is designed to keep that information within your company and not leak it to other companies or third parties.

Some newer workplace AI assistants, like the one you may use today, check a user’s permissions and then access, in real time, the documents, meeting transcripts, and email messages that user can access. If you remove a file, the data usually becomes unavailable for AI retrieval within minutes. The rest of this article focuses on this newer type of retrieval. By contrast, if your organization uses an internal vector database to store information for AI retrieval, deleting a source file won’t remove that information from AI responses until the tool explicitly refreshes its index.
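To illustrate the difference, here is a minimal Python sketch under loudly stated assumptions: `LiveStore`, `CachedIndex`, `live_retrieve`, and `cached_retrieve` are hypothetical names, and simple keyword matching stands in for real vector similarity. It shows only the timing issue described above: a live lookup stops returning a deleted file immediately, while a separately stored index keeps returning it until the index is refreshed.

```python
from dataclasses import dataclass, field


@dataclass
class LiveStore:
    """Hypothetical source system (files, mail) that retrieval queries directly."""
    docs: dict[str, str] = field(default_factory=dict)

    def delete(self, doc_id: str) -> None:
        self.docs.pop(doc_id, None)


@dataclass
class CachedIndex:
    """Hypothetical stand-in for a vector index: keeps its own copy until refreshed."""
    source: LiveStore
    snapshot: dict[str, str] = field(default_factory=dict)

    def refresh(self) -> None:
        # Re-copy the source; deleted documents drop out of the index only here.
        self.snapshot = dict(self.source.docs)


def live_retrieve(store: LiveStore, term: str) -> list[str]:
    # Keyword match stands in for semantic search (an assumption for brevity).
    return sorted(d for d, text in store.docs.items() if term in text)


def cached_retrieve(index: CachedIndex, term: str) -> list[str]:
    return sorted(d for d, text in index.snapshot.items() if term in text)


store = LiveStore({"memo": "merger plan draft", "notes": "team lunch"})
index = CachedIndex(store)
index.refresh()
store.delete("memo")

print(live_retrieve(store, "merger"))    # []
print(cached_retrieve(index, "merger"))  # ['memo'] (stale until the next refresh)
index.refresh()
print(cached_retrieve(index, "merger"))  # []
```

In a real deployment the gap between deletion and refresh depends entirely on how the tool schedules re-indexing, which is why it is worth asking your vendor or IT team.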

But the dark side of this fantastic feature is reduced privacy. AI tools with permission to access documents or email are designed to enhance their responses with information from meetings, the emails you send and receive, and the files you’ve saved. They can examine all of that information, including files that have accumulated in your online storage over many years. If someone with the right privileges asks AI a question about a topic or person, what you said in a meeting or typed into an email or saved document might appear in the results, unless you have deleted every instance of the old meeting notes, email messages, files, and other sources. Angry messages, failed plans, and long-forgotten mistakes can be resurrected even though you’ve put them behind you. Inappropriate jokes a friend emailed you, or private conversations with loved ones over company email, could be exposed too if you never deleted them.

Before going any further, let’s clarify what this article covers. When people talk about AI privacy, they are often concerned that what they type into an AI chat tool will leave their organization and show up somewhere else in the world. That’s not what we’re covering here. We’re covering the situation where the data stays within your organization, yet other people in your organization find out more than they need to know, even without trying. Given a request, AI can quickly return data based on the user’s privileges without the user needing to find a specific file, message, or meeting. As a result, users might see content they never expected or intended to see, including private or sensitive information they shouldn’t have access to, a phenomenon dubbed AI “oversharing.”

This article focuses on companies where multiple users share data, rather than single users or tiny offices that don’t use shared storage. However, everyone, including single-computer organizations, should read the section below titled “Potentially Dangerous Third-Party AI Assistants.”

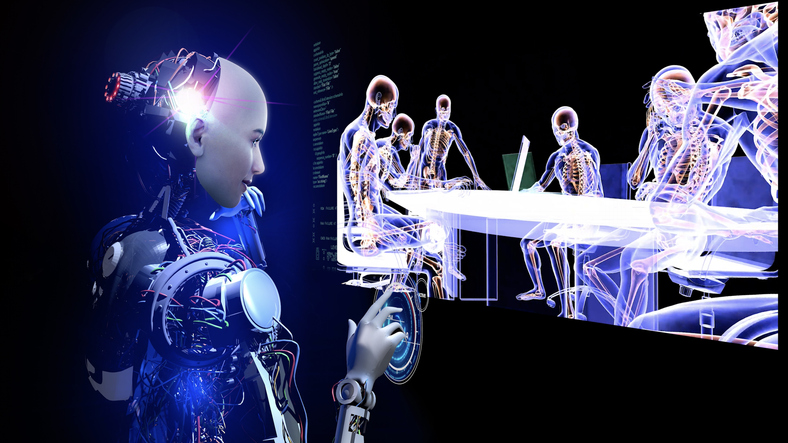

With AI assistants, information stored in your organization may be available to anyone else in your organization who has the right access privileges. People no longer need to invest energy in searching; as long as they have access rights, they can ask AI a simple natural-language question and find the data in the blink of an eye.

It’s becoming apparent that people will be forced to accept this reality and must be very cautious about what they say in meetings or type into emails and saved files. Of course, you have no control over what someone sends you in an email, which makes the situation worse.

The good news is that AI tools cannot retrieve data once it is permanently deleted from all systems and backups, assuming the tool you use for RAG accesses only current content and does not save old content. As of this writing, most reputable tools from well-known vendors treat a deleted file as no longer eligible for access by workplace AI assistants. However, given the sheer volume of information accumulated over the years, finding and deleting every old file, meeting, and message could be nearly impossible.

Software and operating systems that gather your and your organization’s data to provide more relevant answers (RAG) usually include multiple privacy safeguards. However, those protections can be bypassed in certain circumstances, such as an official e-discovery process.

Typically, the AI tool verifies the user’s permissions to the data before it considers augmenting a response with that data. When a user asks for information, the system is designed to return only information the user has permission to see, a process called trimming (or security trimming).
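Trimming can be pictured with a short, hypothetical Python sketch. It is not any vendor’s implementation; the `Document` type, the `trim` and `build_prompt` functions, and the group names are illustrative assumptions. The point is that retrieved items are filtered against the requesting user’s identities before anything reaches the AI prompt, and that a misconfigured share (here, a draft accidentally shared with an “everyone” group) passes the filter for nearly everyone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed: frozenset[str]  # users or groups permitted to read this item


def trim(candidates: list[Document], principals: frozenset[str]) -> list[Document]:
    """Security trimming: keep only items shared with at least one of the user's identities."""
    return [d for d in candidates if d.allowed & principals]


def build_prompt(question: str, candidates: list[Document], principals: frozenset[str]) -> str:
    """Augment the question with trimmed content only; filtered-out items never reach the model."""
    context = "\n".join(d.text for d in trim(candidates, principals))
    return f"Context:\n{context}\n\nQuestion: {question}"


docs = [
    Document("budget", "Q3 budget summary", frozenset({"finance"})),
    Document("review", "Dana's performance review", frozenset({"hr", "dana"})),
    # Misconfiguration: a venting draft accidentally shared with everyone.
    Document("rant", "Angry draft about a vendor", frozenset({"everyone"})),
]

alex = frozenset({"alex", "everyone", "finance"})  # Alex's user ID plus group memberships
print([d.doc_id for d in trim(docs, alex)])  # ['budget', 'rant']
print(build_prompt("What is going on with our vendors?", docs, alex))
```

Trimming only enforces the permissions it is given; it cannot tell a deliberate share from a careless one, which is why permission hygiene matters as much as the AI tool itself.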

For example, workplace AI assistants integrated with your organization’s email applications have access to your messages. When you ask for information, the AI tools are designed to draw only on your own mailbox. Unless you’ve delegated email access to someone else, random people in your organization should not receive answers augmented with information from your sent and received email messages.

However, a technology leader at a leading provider told me that their AI tool does not protect the privacy of a user’s email when there is a misconfiguration or when the interested party has elevated roles. In those cases, he said, all user email content is available to other users with enough privileges. He described the trade-off between data access and privacy with a metaphor: before AI augmentation, finding sensitive data in a company was “like looking for a needle in a haystack,” scattered across random files and email messages. Now, with AI-powered tools, “you find the needle immediately just by asking a question.” He recalled asking one of his technical pros, “Show me email messages where anyone praised our competitors.” The results appeared instantly, with sender information fully visible. “The AI tool doesn’t give you a haystack,” he concluded. “It gives you a stack of needles.”

A member of my team and I eagerly met with AI technology leaders, hoping to persuade them to make conversations completely private for sensitive meetings, such as conversations related to M&A, personnel matters that require confidentiality, trade secrets, and new competitive products or services that would harm a company if the details were discovered prematurely. The most senior person we visited, who influences AI privacy at a huge software company, was surprised by my suggestion that executives sometimes want discussions in online meetings to remain private forever.

He is not alone in believing that all executive communications should be discoverable. Knowing that their conversations could be disclosed helps keep executives accountable and strongly deters misconduct. Some regulations, and in certain circumstances the law, require this transparency. Some people also feel it is unfair for executives to enjoy privileged communications immune from e-discovery.

The senior executive, who has the power to set AI privacy policy, emphasized that the whole point of AI ingesting meeting conversations and other data is to make information available for AI processing; any restriction reduces the tool’s functionality. In his view, productivity outweighs privacy. He acknowledged that there have been concerning incidents of sensitive data being overshared with users, and he accurately pointed out that these are often due to customers not properly preparing, deploying, or maintaining the AI tools and their data governance and privacy controls.

He retorted that executives who want private meetings with undiscoverable content should use an encrypted messaging app such as Signal, not his company’s online meeting platform. He also told me he appreciated my feedback that leadership sometimes needs absolute privacy and that his team would consider it.

Yet their position is firm. Companies that use workplace AI assistants with access to company information must now accept that tool’s specific privacy controls, which may mean significantly less privacy for sensitive information inside the company. While many application providers build in protective controls, the reality is stark: complete privacy of workplace communication is in jeopardy.

There are many examples of data augmentation across the industry. One is Microsoft 365 Copilot, which can use RAG to augment responses with information from email, meetings, and files. It provides many advanced privacy controls, including those described below. Some of the more advanced protections, such as automatic sensitivity labeling, are unavailable unless your organization invests in the top-tier Microsoft 365 “E5” license. Companies with the “E3” license must label content manually or risk unexpected disclosure.

Microsoft’s free “Copilot with Enterprise Data Protection” differs from the free consumer version of Copilot in that users must sign in with work (Entra ID) credentials. It doesn’t automatically access your organization’s data; users can only upload files manually for tasks like summarization. Your IT team can configure data loss prevention policies to block uploads of sensitive files, but these protections aren’t enabled by default, so initially any file can be uploaded. This free version doesn’t integrate with Microsoft 365 apps the way paid Microsoft 365 Copilot does, so it doesn’t provide real-time document editing, Teams meeting summarization, or Excel formula suggestions within your apps. It does provide web search, document summarization, and general chat. While it offers some enterprise protections when configured by IT, it’s not a complete company solution like the paid Microsoft 365 Copilot versions.

Google Gemini is now integrated with Google Workspace and can review and consider information in Workspace when it responds to user prompts. Google does not train Gemini on your data, so your information is not released to the world that way, and it provides strong security measures to help keep private data private. But even with the provided settings, a qualified person in your organization must configure those measures and keep them current. Default settings sometimes favor functionality over privacy, so your team must understand the settings and keep up with them as they change.

From now on, you must choose your words carefully in online meetings and never say anything you don’t want discovered. Content discussed in meetings may be captured in AI-generated transcripts, summaries, or recordings, making even casual conversations potentially discoverable in legal proceedings. By default, permission for AI to return results from the transcript is typically given to all meeting attendees. If someone is invited but arrives late or doesn’t show up, avoid the temptation to make a joking or offhand comment about them. That person might later want to know whether they missed anything important and ask AI, “Did anyone say anything about me?” Your comment will be disclosed. Depending on what you said and how sensitive they are, you might find yourself in an HR nightmare. There is no such thing as “off the record” in meetings where AI transcription or summarization tools are active. With some commonly used operating systems and tools, this recording is always enabled and difficult to block.

Distributing AI-generated meeting summaries to participants without a human first reviewing them for accuracy is dangerous. AI is prone to hallucinations and transcription errors, especially when audio quality is poor. AI also makes errors when people use ambiguous language, such as “They said it was approved.” Who is “they,” and what did they approve? AI will try to decide, but could get it wrong. Other examples are “We need to address the issue” and “Send it to them.” AI must guess from the context of the conversation what “we,” “they,” “the issue,” and “it” refer to. Understandably, AI sometimes guesses wrong, and meeting summaries can include inaccurate information and even topics never discussed.

After Abraham Lincoln died, historians discovered in archives scathing letters he had written to his generals but never sent. If you sometimes type emotion-filled documents while “venting,” even if you never intend to share them, AI tools may index and analyze everything you type into the draft file you save. In an e-discovery situation, or if someone with elevated privileges asks a question, the AI tool could reveal what you never intended to share.

One major application provider automatically keeps a version history of each file, but its AI tool uses only the file’s current content to answer questions entered by someone with a high enough security level. Break any habit of saving separate files with names such as “AngryLetter-v1,” “AngryLetter-v2,” and so on. If you revise a file for tone or accuracy, do so in the current file, or delete old versions so their content doesn’t show up in AI answers. These strategies work only if your workplace AI assistant accesses current data and does not store old content. Remember, too, that if your system backs up your files and someone with the capability restores a file you deleted, or restores a version from before you removed objectionable content, the information in that restored file may be available as if you had never erased it.

Keeping old email messages out of responses can be slightly trickier, because AI may respond with information stored in your Deleted Items folder. You must remember to empty that folder, or your IT team can set up retention policies that permanently delete email messages after a set date or once they reach a certain age. As with files, if email messages are backed up somewhere and restored, the restored versions may appear in responses to AI prompts. This also assumes your workplace AI assistant does not save old messages elsewhere for retrieval. As of this writing, one of the largest workplace AI providers respects that boundary and doesn’t keep snippets of data after the source is deleted.
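Here is a minimal sketch of why emptying Deleted Items matters, assuming (as described above) that the assistant searches every mailbox folder. The `Mailbox` class and its methods are hypothetical and deliberately ignore backups and restores.

```python
from dataclasses import dataclass, field


def _default_folders() -> dict[str, list[str]]:
    return {"Inbox": [], "Sent Items": [], "Deleted Items": []}


@dataclass
class Mailbox:
    """Hypothetical mailbox; assumes the AI assistant searches every folder."""
    folders: dict[str, list[str]] = field(default_factory=_default_folders)

    def delete(self, folder: str, message: str) -> None:
        # "Deleting" only moves the message; it is still in the mailbox.
        self.folders[folder].remove(message)
        self.folders["Deleted Items"].append(message)

    def empty_deleted_items(self) -> None:
        # Only this step removes the message from the retrievable set in this sketch.
        self.folders["Deleted Items"].clear()

    def retrievable(self, term: str) -> list[str]:
        # Keyword match stands in for the assistant's real search.
        return [m for msgs in self.folders.values() for m in msgs if term in m]


box = Mailbox()
box.folders["Inbox"].append("Off-color joke from a friend")
box.delete("Inbox", "Off-color joke from a friend")
print(box.retrievable("joke"))  # ['Off-color joke from a friend']
box.empty_deleted_items()
print(box.retrievable("joke"))  # []
```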

The goal isn’t to scare people away from using AI tools. It isn’t easy to turn off AI’s reading and recording anyway. Your safest bet is to behave as if everything you type or say will be available for easy retrieval by unexpected people.

Let’s cover some things you can do.

Be sure your IT team uses governance and privacy protections such as:

DLP: Major enterprise software providers offer highly effective data loss prevention (DLP) tools that help keep private information private and allow access only to people with specific or sufficient privileges. However, DLP systems are only as effective as their configuration and upkeep. IT professionals, compliance officers, and other privileged users typically have access to the DLP system and can circumvent its restrictions to reach the data anyway. And if users save documents in unprotected locations, DLP might be unable to protect them.

Data Sensitivity Labeling: Most enterprise AI assistant providers explain that their tools respect file permissions and features like data sensitivity labeling. You and your users can apply labels such as “private” or “confidential” to your content to further restrict who can see it. However, if someone opens an e-discovery case, all undeleted data is potentially available. Nothing you say or type is wholly protected as long as the data still exists.

Retention Limits: A representative from a major tech company suggested that executives can avoid e-discovery exposure of what they say in sensitive meetings by setting retention limits on meeting notes, files, and email. When the retention period ends, the system erases the data after a mandatory holding period. Erased data will no longer appear in results, provided your AI assistant doesn’t save snippets of it elsewhere. However, it can be frustrating to lose access to old documents and meeting summaries after a retention policy triggers their deletion. He also pointed out that if a meeting attendee puts notes or a summary in the meeting chat, that chat content will not be purged; if someone later asks about the meeting in Copilot or during e-discovery, the process will access the data saved in the chat. When setting retention policies, make sure automatic deletion also covers all logs, training data, and monitoring records, which may contain sensitive data in prompts or summaries even after the original content is deleted.
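The retention arithmetic can be sketched in a few lines of Python. The 365-day retention period and 14-day holding period are hypothetical values, and `Item`, `purge_date`, and `is_retrievable` are illustrative names, not a real product API. The sketch also models the caveat above: a summary pasted into meeting chat falls outside the policy and stays retrievable.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical policy values; real ones come from your organization's retention settings.
RETENTION = timedelta(days=365)
HOLD = timedelta(days=14)


@dataclass
class Item:
    name: str
    created: date
    covered_by_policy: bool  # False for copies the policy doesn't reach, e.g. text pasted in chat


def purge_date(created: date, retention: timedelta = RETENTION, hold: timedelta = HOLD) -> date:
    """Date the item is permanently erased: retention period first, then the holding period."""
    return created + retention + hold


def is_retrievable(item: Item, today: date) -> bool:
    """Whether an AI assistant that keeps no copies of its own could still surface the item."""
    if not item.covered_by_policy:
        return True  # never purged by this policy
    return today < purge_date(item.created)


notes = Item("Board prep meeting notes", date(2024, 1, 10), covered_by_policy=True)
chat_copy = Item("Summary pasted into meeting chat", date(2024, 1, 10), covered_by_policy=False)

print(purge_date(notes.created))                    # 2025-01-23
print(is_retrievable(notes, date(2025, 3, 1)))      # False
print(is_retrievable(chat_copy, date(2025, 3, 1)))  # True
```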

Why Deletion May Not Be Enough: As mentioned throughout this article, one of your best protections is deleting the files, chats, messages, meetings, and backups you don’t want AI to use in responses. However, the effectiveness of this strategy depends on whether the tool’s RAG features keep copies of information elsewhere after you’ve deleted it.

Potentially Dangerous Third-Party AI Assistants: An IT professional at one of our best customers called me last week in alarm because he noticed that a new app on their system had rights to scour their email messages and file storage. What used to be a third-party meeting assistant had “upgraded” its feature set to include AI search across documents, notes, and email messages. When a third-party meeting tool accesses your file systems and mailboxes, does it save snippets of your information on its company’s servers? If so, does it encrypt the data and automatically erase it when you delete a sensitive file or remove an email from your account? Can it provide a log or audit trail of who accessed your data? Does it train its tool on your data, potentially exposing your data to its other customers? What happens to your data if you stop using the product? How does it define what data is yours versus its own? Such tools may also offer to gather information from other third-party note-taking tools, CRMs, and users on other operating systems. From a functionality perspective, an AI assistant that is familiar with everything in your work life has great allure. However, it is also a privacy nightmare if the system ever overshares sensitive information, if the third party is compromised by threat actors, or if your organization loses visibility into where your sensitive data is stored and who can access it. Before enabling tools like this, thoroughly vet the third party to determine whether it has the necessary security controls in place and will maintain the security of your data.
Remember the saying, “Your organization’s security is only as good as your third party’s security.” To help stop employees from unknowingly giving outside apps access to your company’s email, files, and other sensitive data, ask your IT team to change the “Allow User Consent” settings from the default so that administrator approval is required before any third-party app can access company data.

Outside Parties: Another risk is that if any of your workers sent data to, or shared it with, someone outside the organization, it may also reside in that person’s systems and could someday be exposed by their AI.

AI Incident Response Plan: Develop a thorough incident response plan for AI incidents. Plan now for how you will manage AI-related crises such as unauthorized data leakage, undetected hallucinations, discrimination (bias), security issues such as prompt injection, and insider misuse. Involve your legal and regulatory advisors in planning so they can address the relevant obligations.

Security Considerations for Incident Response, HR Investigations, and More: Many organizations use ticketing or helpdesk systems that weren’t originally designed to handle sensitive issues, including cybersecurity incidents, HR complaints, and insider threats. Examples include Jira, ServiceNow, and Teams/Outlook. These systems are integrating AI features. If you allow AI tools to automatically index your primary helpdesk system, they may unexpectedly augment responses with sensitive investigation content and disclose it to unauthorized users. This creates risks such as exposing privileged communications with legal counsel, compromising the integrity of confidential evidence, and disclosing sensitive employee information. Instead, use a completely separate, access-controlled case management system for incident response, HR investigations, and other sensitive matters, and ensure it is excluded from AI indexing and augmentation. Work with your legal and compliance teams to isolate the systems, enforce strict access policies, and apply appropriate retention and audit log controls.

In case it comes up in a conversation with your IT pros, Microsoft allows administrators to configure “Azure AI Search” indexing restrictions to help prevent AI from accessing specific data, such as files, emails, calendar events, and meetings. However, blocking indexing has negative consequences, such as breaking search for text in email message bodies in Outlook on the web, for content inside Word, Excel, and PDF documents in the web apps, and in Teams online.

Keep in mind that your IT team is already very busy; adding AI governance to their responsibilities may require removing something else or outsourcing.

As time passes, AI will draw on more and more information from your existing documents and data through RAG, including what AI thinks was said at every meeting. People will become more aware of the new normal in privacy. Unless you are positive that you can and will permanently delete all history, be careful about anything you say in online meetings or type into documents or email. Use words and sentences that will reflect well on you and others in case someone with enough permissions asks AI what you said.

For better, worse, or both: AI is listening. Protect your privacy before it is too late.